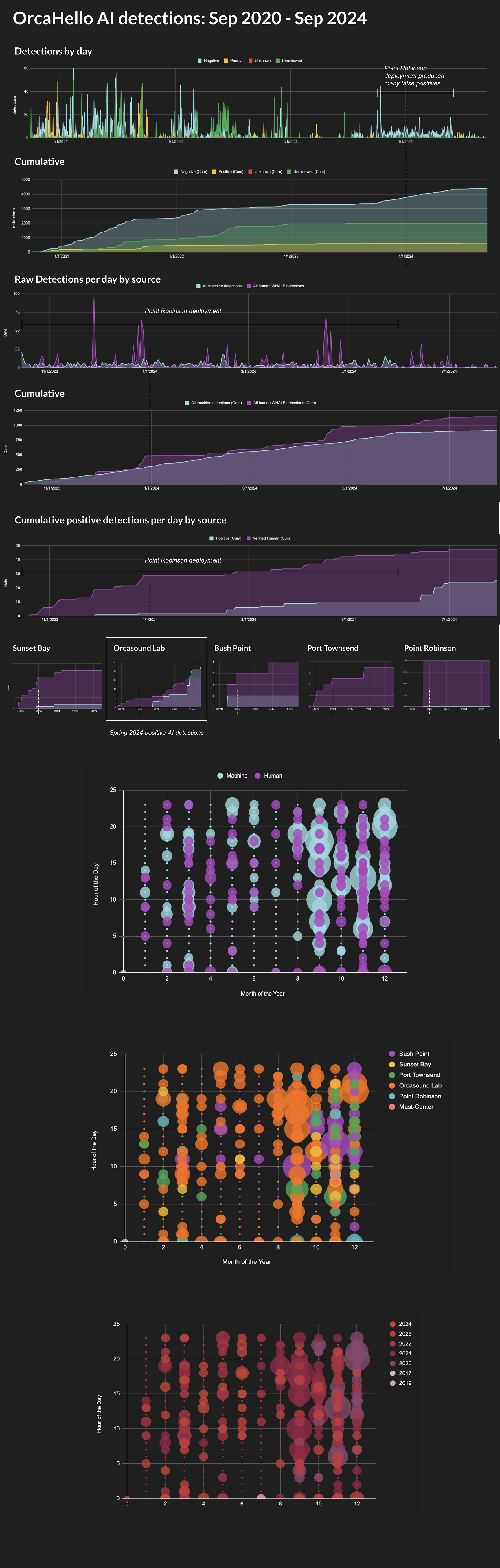

Working with data from the Orcasound hydrophone network in the Puget Sound / Salish Sea, I analyzed and compared reports of orca activity made by an AI audio interpretation model with those made by an online community of citizen scientists. The results led to immediate insights for improving both the AI model and the human listening experience.

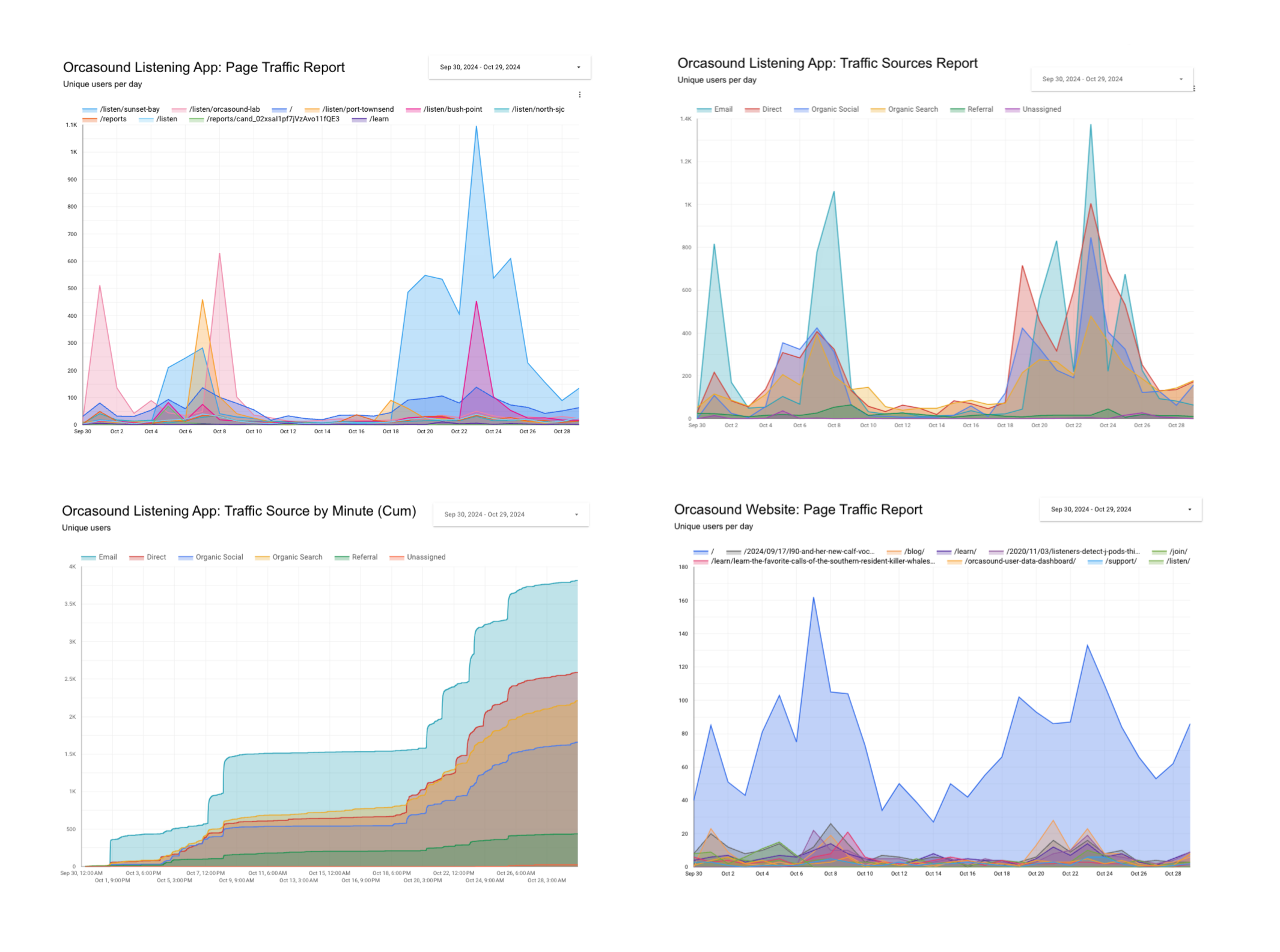

User analytics reports showed that the citizen science community consistently turned out in force during live listening events, even without receiving an alert, using networking platforms like Facebook and WhatsApp to spread word of orca sightings and movements.

A careful comparison of the data shows that the AI model, trained to detect orca calls using audio labeled by professional scientists, did not perform as well as listeners in the citizen science community. An important reason is the additional context human listeners draw from other sources, such as sighting networks, whereas the model relies on audio alone.

Nomad Health's design system was an ever-evolving work in progress, built through the combined efforts of the UX design and front-end engineering teams. Implementing the system required auditing inconsistent, ad-hoc code from across many areas of the product and establishing a set of shared resources available in both Figma and GitHub. The outcome was a streamlined development workflow with fewer quality control issues and more nimble product iterations, improving team productivity by 20%.

The use of "design tokens" was key to the success of the design system, letting styles and components in the app's React codebase update in cascade from a single source of truth. Designers could access a global library of components, with one-to-one mirroring between Figma and code.
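To illustrate how tokens can cascade from a single source of truth, here is a minimal TypeScript sketch. The token names, values, and the `resolveToken` and `toCssVariables` helpers are hypothetical and not Nomad's actual system. It assumes a common convention in which semantic and component tokens reference base tokens through a `{...}` alias syntax, and the resolved values are exported as CSS custom properties that React components can consume with `var(--token-name)`.

```typescript
// Hypothetical token set: names and values are illustrative only.
// A value is either a raw style value ("#1a73e8") or a reference ("{color.blue.500}").
type TokenValue = string;
type TokenMap = Record<string, TokenValue>;

const tokens: TokenMap = {
  // Base (primitive) tokens
  "color.blue.500": "#1a73e8",
  "color.gray.900": "#202124",
  // Semantic tokens point at base tokens
  "color.action.primary": "{color.blue.500}",
  "color.text.default": "{color.gray.900}",
  // Component tokens point at semantic tokens
  "button.primary.background": "{color.action.primary}",
};

// Matches an alias such as "{color.blue.500}" and captures the referenced name.
const REF = /^\{(.+)\}$/;

// Follows alias chains until a raw value is reached; rejects unknown or circular references.
function resolveToken(name: string, map: TokenMap, seen: Set<string> = new Set()): string {
  if (seen.has(name)) throw new Error(`Circular token reference: ${name}`);
  const value: TokenValue | undefined = map[name];
  if (value === undefined) throw new Error(`Unknown token: ${name}`);
  const match = REF.exec(value);
  if (match === null) return value; // raw value: nothing left to resolve
  seen.add(name);
  return resolveToken(match[1] as string, map, seen);
}

// Emits every token as a resolved CSS custom property, e.g. "--color-action-primary: #1a73e8;".
function toCssVariables(map: TokenMap): string {
  const lines = Object.keys(map).map(
    (name) => `  --${name.replace(/\./g, "-")}: ${resolveToken(name, map)};`
  );
  return `:root {\n${lines.join("\n")}\n}`;
}

console.log(toCssVariables(tokens));
```

In this sketch, changing `color.blue.500` in one place updates `color.action.primary` and `button.primary.background` everywhere they are used, which is the cascading behavior a token-based system aims for.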

As a core member of the design team at Nomad Health, I helped build the UX Research function, working to establish in-house procedures for conducting research effectively and documenting insights for use across the organization.

As part of this effort, I conducted original research on both sides of Nomad's two-sided marketplace, connecting storylines between facility managers and traveling clinicians. For each study, I compiled a results deck with summaries of actionable findings.

Product team members at Nomad continually needed to see how insights from user interviews connected to business goals. I iteratively re-examined personas and user needs with each study, and made proposals based on accumulated insights.

As the first full-time design hire for a new product initiative, I took advantage of an on-site engineering summit at Nomad's office in Charlotte to work with my team in person and run a UX design thinking workshop.

Prior to the workshop, I prepared a visual summary of research done on the initiative's target users to date.

User interviews with more than 20 managers identified the following as the most important pain points in the travel nurse hiring process:

To build empathy for users, I divided the 12-person team into small groups, each assigned an exemplary persona from the user interviews. Each group had an hour to write a story about their persona, following a hero's journey structure.

After each group shared its story, I led the whole team in narrowing the stories down to a single user need statement that we all agreed rang true for the people we were writing about.

In addition to building team camaraderie, the exercise built momentum for new product features, including Bill Rate Insights and Market Analytics.

Building on the success of our Bill Rate Insights project, and drawing on lessons from our Team Vision workshop, my team decided to address the need for travel nursing market insights among healthcare facility executives and decision-makers.

Our proposed Market Analytics dashboard would deliver actionable budget planning guidance for healthcare finance teams, including predictive trends and competitive intelligence based on Nomad's industry-leading database of travel nursing jobs.

Nomad's B2B product allows nurse managers within healthcare facilities to manage their job postings for travel nurse positions. For each job posted, managers can see the number of views by nurses on the Nomad platform, and evaluate applications as they come in.

User interviews with more than 20 managers identified the following as the most important pain points in the travel nurse hiring process:

While Nomad's MVP facility product saved managers time in processing applications, my team needed to keep improving our offering to address our users' most pressing needs.

User stories

Outcome

We designed and shipped the Bill Rate Insights feature within one quarter, increasing sales opportunities with new customers and landing the product's largest health system client to date.

Applying for traveling clinician jobs can be an arduous process, requiring many forms and certifications. One benefit of registering on the Nomad platform is the ability to reuse application materials when applying for new jobs.

I developed a 'one-click apply' flow that lets users apply to every job in the system that matches an application they have already submitted. We designed, tested, and launched the feature within one quarter, resulting in a 30% increase in job applications.

Building on this success, we beta-tested a new 'application auto-pilot' program that lets users opt in to have Nomad submit applications to jobs on their behalf. To increase enrollment, we integrated the auto-pilot sign-up into the one-click apply flow, which increased participation 1.5x.

In growing Nomad Health's business-to-business (B2B) capabilities, an important goal was to give internal account managers the ability to run promotional campaigns to boost applications for specific blocks of jobs.

My role was to design an in-product merchandising experience that would add value for both users and business partners.

This project called for rapid ideation, generating many options that could lead to novel solutions. One of the first challenges was a negative reaction, from users and stakeholders alike, to the idea of adding advertising to the product, which many saw as an anti-pattern. At the same time, various strategies were already underway to boost applications across all jobs on the platform, so giving promoted jobs an additional edge required a distinct approach.

Ideation and testing with users revealed many insights that helped define how a merchandising flow could serve user needs. Users described several pain points that a promotional strategy was uniquely positioned to address.

For Nomad Health, a growing startup, a key business goal was to increase its registered user base. The inbound search feature plays an important role in onboarding new users by letting them search Nomad's job inventory before committing to creating an account.

Working with my product partners, I began by defining critical elements of the user flow to create a useful, high-impact call to action for the site's home page.

Through iterations, we identified ways to quickly connect new users with the most relevant jobs possible, in order to boost new registrations as well as job applications.

Because healthcare work is complex, users filter their searches by multiple factors, including discipline, specialty, and location.

In user testing with travel clinicians, we learned that the first question users need answered is whether the platform supports the discipline they are trained and certified in. Before committing time to the platform, users need to know quickly whether it staffs for the roles they can work in.

One of Nomad's competitive strengths as a platform is that it supports a broad range of disciplines, allowing people from many backgrounds to find travel work. Asking for discipline up front also works in Nomad's favor, creating an opportunity to showcase its broad job inventory.

This user flow increased new registrations by 20%, especially across the longer list of Allied Health disciplines.